AreteTheory

Projects made by Ruben Bentley

I am a recent graduate of Durham University with a Master's degree in Theoretical Physics, specialising in the application of machine learning techniques to innovative methods in particle physics.

Currently, I am exploring projects involving gambling simulations, sports betting and analysis, time series modelling, and natural language processing. I am eager to find opportunities that will further my intellectual curiosity and allow me to apply my skills in a forward-thinking environment.

Performing Loop Integration Using Neural Networks

Abstract: A neural network (NN) was used to integrate the Feynman-parameterised integral for the one-loop process of Higgs boson pair production via a top-quark loop, over a region of phase space. Randomly sampled phase-space points and Feynman parameters were used to obtain exact integrand values, which were then fitted to the derivative of the neural network. The trained network evaluated these integrals over the trained region ≃ 10 times faster than the Monte Carlo integrator pySecDec, which integrates over specific phase-space configurations. Several activation functions were tested to improve on the accuracy reported in the current literature and to deepen the theoretical understanding behind it. The architectures performed differently because the shapes of their activation functions' derivatives affected the behaviour of the backpropagated gradients during training. The GELU-based architecture was the most accurate, with a mean of 3.9 ± 0.2 correct digits over the trained phase-space region, compared with 3.4 ± 0.2 digits for the tanh-based network (the most accurate in the original literature). Larger batch sizes improved accuracy: the GELU-based network reached only 3.4 ± 0.3 digits when trained with a batch size 25 times smaller. Deep GELU networks were slightly less accurate, at 3.8 ± 0.2 correct digits. GELU-based networks generalised better at the boundaries of the sample space than the softsign, sigmoid, and tanh networks. The shape of the GELU's first derivative made it less susceptible to dead-node formation than the other activation functions tested.
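The remark about derivative shapes can be made concrete with a small sketch. The code below is illustrative only and is not taken from the thesis: the function names are hypothetical, and it assumes the exact erf-based definition of GELU (the thesis may have used an approximation). It evaluates the first derivatives of the four activation functions named in the abstract at a few points.

```python
import math


def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_prime(x):
    """d/dx GELU = Phi(x) + x * phi(x), where phi is the standard normal PDF."""
    cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return cdf + x * pdf


def tanh_prime(x):
    """d/dx tanh(x) = 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


def sigmoid_prime(x):
    """d/dx sigmoid(x) = s * (1 - s), with s = sigmoid(x)."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def softsign_prime(x):
    """d/dx [x / (1 + |x|)] = 1 / (1 + |x|)^2."""
    return 1.0 / (1.0 + abs(x)) ** 2


if __name__ == "__main__":
    # Print each derivative at a few sample inputs for comparison.
    for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(
            f"x={x:+.1f}  gelu'={gelu_prime(x):.4f}  tanh'={tanh_prime(x):.4f}  "
            f"sigmoid'={sigmoid_prime(x):.4f}  softsign'={softsign_prime(x):.4f}"
        )
```

For large positive inputs the GELU derivative stays close to 1, whereas the derivatives of tanh, sigmoid, and softsign all decay towards 0. This is consistent with the abstract's observation about dead-node formation, although the thesis itself should be consulted for the full argument.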

Keywords: Neural Network, Activation Function, GELU, Parametric integration, Backprop

Description: This is my Master's thesis, for which I achieved a first-class mark, one of the highest in my cohort.

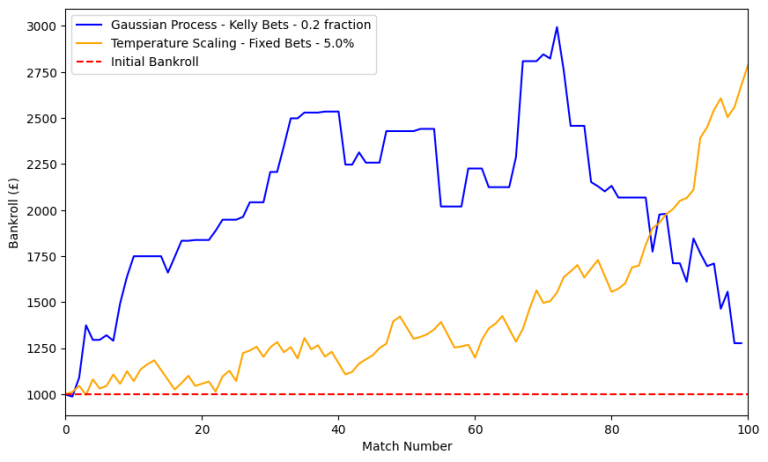

UFC Match Prediction and Analysis: Initial Report

Background: This brief initial report presents a proprietary deep learning model (AI) designed to analyse past UFC bouts in order to understand and predict athlete performance and match outcomes.

Because significant value and potential were found in this model, few of its details are addressed here; this report is intended to display its performance.

A large database was compiled from various online resources. Features were engineered to obtain key performance metrics such as proportions, percentages, rates, and totals to quantify an athlete's damage, offence, defence, and physical advantages. This data was used to train a deep learning model capable of predicting match outcomes with 71% accuracy, as well as producing outcome probabilities for Kelly risk mitigation and for capitalising on positive expected value opportunities.
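As a rough illustration of how outcome probabilities feed into Kelly staking and expected value, the sketch below uses the standard textbook Kelly formula for a single binary bet. It is not the proprietary model or its betting strategy; the function names, the decimal-odds convention, and the example numbers are all assumptions made for illustration.

```python
def expected_value(p_win, decimal_odds):
    """Expected profit per unit staked, given a win probability and decimal odds."""
    net_odds = decimal_odds - 1.0  # profit per unit staked if the bet wins
    return p_win * net_odds - (1.0 - p_win)


def kelly_fraction(p_win, decimal_odds):
    """Textbook Kelly fraction f* = p - (1 - p) / b, floored at zero.

    b is the net odds (decimal odds minus one). A result of zero means
    the bet has no positive edge and should be skipped.
    """
    net_odds = decimal_odds - 1.0
    if net_odds <= 0.0:
        raise ValueError("decimal odds must be greater than 1")
    fraction = p_win - (1.0 - p_win) / net_odds
    return max(fraction, 0.0)


if __name__ == "__main__":
    # Hypothetical example: the model gives 60% to a fighter priced at 2.0.
    print(expected_value(0.6, 2.0))
    print(kelly_fraction(0.6, 2.0))
```

In practice many bettors stake only a fraction of the Kelly amount, since the formula assumes the probability estimate is exact.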

UFC Data Science for Athlete Performance:

When advising athletes, data science can reveal what lies behind dominant victories, even in mathematically unfavourable match-ups. This is done by projecting in-match statistics and using them to assist game plan formation, with the aim of producing better outcomes according to the prediction technology. Currently, we are assisting one UFC fighter as he prepares for his next match.

Current Performance of the model and future plans:

The current optimum betting strategy shows potential for 6000% annual profit margins.

Fortnightly updates will be uploaded to show the prediction model's progression and other athlete performance technologies.

Keno in Macau: A Brief Investigation of Its Mechanics and Risks

Background: After my graduation, I went travelling around Asia, where I visited some casinos in Macau (MGM and Venetian). I was introduced to Keno, a game originating in ancient China with similarities to the modern lottery.

Keno is played on a board with 80 numbers; each round, 20 random numbers are highlighted. Players select a specific number of "pins" (numbers), with the payout increasing as they correctly select more. However, the more pins a player chooses, the lower the payout for each correct pin. This creates an important trade-off: opting for more pins increases the probability of hitting a winning number, while fewer pins offer the potential for higher payouts.

Overview: This report investigates the 10-pin Keno game as played in Macau, exploring its odds and various nuances. I have investigated different betting strategies, the potential for spotting biases in the game, and what my experiences taught me about gambling in this exciting landscape.
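The odds of the 10-pin game follow from the 80-number board and 20-number draw described above: the number of hits has a hypergeometric distribution. The sketch below computes those probabilities. It covers only the hit probabilities, not the Macau payout tables (which are not reproduced here), and the function names are illustrative.

```python
from math import comb

NUMBERS = 80  # numbers on the board
DRAWN = 20    # numbers highlighted each round


def hit_probability(pins, hits):
    """Probability of exactly `hits` correct numbers when choosing `pins` numbers.

    Hypergeometric: C(20, hits) * C(60, pins - hits) / C(80, pins).
    """
    if hits < 0 or hits > pins or hits > DRAWN:
        return 0.0
    misses = pins - hits
    return comb(DRAWN, hits) * comb(NUMBERS - DRAWN, misses) / comb(NUMBERS, pins)


def expected_hits(pins):
    """Mean number of hits for a given number of pins."""
    return sum(k * hit_probability(pins, k) for k in range(pins + 1))


if __name__ == "__main__":
    # Distribution of hits for the 10-pin game.
    for k in range(11):
        print(f"{k:2d} hits: {hit_probability(10, k):.3e}")
    print("expected hits:", expected_hits(10))
```

Combining these probabilities with a casino's payout table would give the expected return per bet, which is the quantity a betting strategy has to be judged against.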

Venetian

MGM

Future Considerations: Investigate other Keno games (all possible pin counts), and develop strategies to mitigate long-term risks in mathematically favourable circumstances. Explore the demographics of players, and identify common patterns in player behaviour using machine learning models.

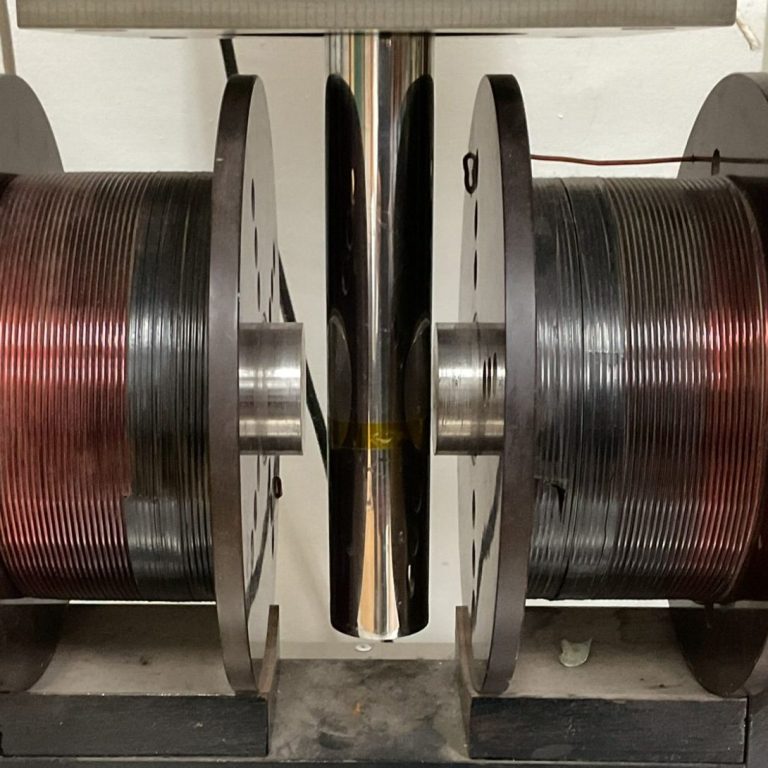

The effect of anisotropy, due to macroscopic strain and B-field incident angle, on a YBCO strip's critical temperature Tc

Abstract: The anisotropy of commercial YBCO was demonstrated by varying the angle between the incident B field and the copper oxide planes, which showed the two-dimensionality of YBCO's superconductivity. Tc decreased from (90.9 ± 0.5) K to (89.8 ± 0.6) K when the B field was at a maximum. Macroscopic strains were applied on 3D-printed bridges in zero field, and Tc did not change. When a B field was applied at the highest strain, Tc became (88.8 ± 0.5) K. B-field intensity on the CuO2 planes is negatively correlated with Tc. The degrees of freedom in the experiment were reduced, which showed that the largest contribution to the error was the angle between the B field and the copper oxide plane. BCS theory and the modern view of cuprate superconductivity were discussed.

Description: This was my 3rd-year advanced laboratory project, for which I achieved a first-class mark, second in my cohort for this field.

Potential Improvements: I could model the superconducting transition with a neural network, and use the derivative neural network architecture to find the critical temperature.

AreteTheory Match Prediction Software

4700% profit generated

6 Active Contributors

1 High Profile Athlete

©Copyright. All rights reserved.
